CrX Architecture Part-2

[CrX Architecture Cont..]

The cluster

It is hoped that clustered neurons represent the smallest truth, while others are just noise. So, by activating the smallest truth, we will always get an output, even if it is the wrong output, similar to the brain. By increasing the smallest truth, we can improve the output to more accurately manifest reality. And by allowing only the smallest truth/cluster to activate and move forward in the layer, we can obtain the right set of outputs.

Math behind clustering algorithm

The partitioned blocks serve as input for

clustering. Clustering occurs for random neurons within any partitioned blocks

that are close to each other. By clustering the closely arranged random neurons,

the algorithm fixes them into that position, while random neurons that do not

meet this criterion are set to normal again (they are not fixed). Only the

values (scaled pixel values) of active chained neurons that are surrounded by

clustered random neurons are allowed to move to the next layers in the network.

pixel values in the chained neurons that do not contain clustered random

neurons are not moved to the next layers in the network.

Ø Cluster’s role in destabilizing mechanism

Clustering is the final stage in the destabilizing mechanism. After clustering, except for the clustered neurons, every other neuron is set to normal. The only changes are made to the clustered neurons, which are fixed onto their positions. The clustered random neurons are not fixed permanently; instead, they follow a flexible fixing in that position. If after many iterations of different kinds of input into the model, the already clustered random neurons are not clustered again, then those random neurons in that cluster are dispersed into that space.

The clusters resemble the plasticity in our brain. If impulses do not activate a connection between two neurons for a long time, then any nearby impulse removes the connection (as without impulses, the connection weakens), and the neurons with the removed connection now connect to any nearby impulse neurons. Impulses are the food for the neuron; they are naturally attracted to it.

Ø Partition functions assistance in clustering

They filter the important clusters by partitioning only them. What is an important cluster? The cluster that is surrounding or nearby, close to the chained neuron, is an important random neuron. As they are directly attached to the chained neuron, these chained neuron activations, containing pixel values, represent a direct response to the pixel values of the input.

So, as the random neurons attach themselves

directly to the chained neuron, they represent the smallest truth. The

partitioning algorithm partitions only those closely arranged random neurons.

By this, they are further clustered by an algorithm, and then the clustered

random neurons direct the impulse (pixel values movement across layers)

direction between layers.

Ø What happens after clustering?

The clustering algorithm only clusters the random neurons that happen to be closer around the chained neurons. Once clustered, they are fixed onto that position, which is required to utilize the direction-changing abilities of the cluster. Only the chained neuron values that are surrounded by clustered random neurons are allowed to pass into the next layer within the same network. However, both clustered random neurons and chained neurons' pixel values are allowed to pass into the respective layers of the second network.

The random neurons that are not clustered are

set to normal. These random neurons are again normally attracted to other

chained neurons. Unclustered random neurons are not fixed onto that position.

Ø After clustering, Layers output to next layers and next network layers –

After clustering the nearby random neurons,

the clustered random neurons around the chained neuron become activated

neurons. Only the activated chained neurons' pixel input values are transferred

to the next layers of the same network. However, the transfer of output between

layers of different networks will involve clustered random neurons and the pixel

input values.

Impulse Direction changing abilities of

cluster (important mechanism, no error should be made in this mechanism) –

(To better understand this concept, read Linearity of Thoughts from BrainX)

To enhance understanding, we will refer to the pixel input values in the chained neuron as impulses. If there are clusters around the chained neurons, they are active because the random neurons that cluster perform the role of impulses present in the brain. These clusters can change, create, or destroy the direction for impulse traveling in the network layers. These changing abilities map the correct set of clusters to the inputs. And these changing abilities are performed only when clusters are nearby.

In our model/brain, the senses are what help to perform impulse manipulation. That is, each sensor collectively changes the direction of the impulse with the help of clustered chained neurons. Chained neuron paths are like multiple river paths, and each sensor's impulse is the incoming water force that changes the direction of the overall water flow. Direction will change only if there are potential nearby clusters to easily pass the impulses from multiple sources. If far-away, not every impulse will get to that neuron's pathway.

These nearby clusters hold significance because the inputs will store required clusters nearby, not far away. This statement is valid because the inputs will attract random neurons to form clusters if the input contains any value. There should be contrast with the background for this to happen, and for the object and background to be nearby, their values scaling by the distribution density function will have significant values nearby, not far away.

For example, if a tea cup sitting on a table is given as input, the significant values are vertical and horizontal continuous lines. This distinguishable continuity will set the distinct but important values to be nearby in positions inside the models layers. Therefore, the reason input will form clusters nearby is due to continuity in the input. If there is no continuity, then the input will not follow the laws of physics. The input will follow the order of nature, which contains continuity on both small and large scales. Whatever the input, there is definitely continuity in every input.

There is a another logic to be added into this

mechanism, currently this function connects with nearby clusters that have high

density. If this was the case, then everytime we have higher density clusters

to get activated. No, we want the right cluster to get activated. So for that

we have to include a connection or link after the source cluster finds any high

density nearby cluster for a first round in any layer. This link will act as

indicator to avoid the connected high-density cluster to get activated again,

this second time. The second high density cluster will get activated. And also,

we will include a refractory period type logic in this link, that is we set a

weight on these links like (some number) and insert a decay rate (linear or

exponential). In this way we can make the all clusters to get activated not

just always the high-density clusters.

even the brain does this like – first activate a large cluster (question type?

Why questions are related to primary emotions most of the time then the

answers, so they will be of large clusters) this connection goes refractory

period then nearby second large cluster gets activated (mostly an answer). At

some time, we will get to the answer straight then in that case the

intermediate impulse of questions is not got into input neurons, the answer

cluster was so nearby it preceded and activated the answer cluster to give

output. More like 5*5 = [4*5 (20) + 5] = 25 this intermediate step will not get

into input as soon the output will beat the intermediate step impulse and gets

into the frontline input neurons (or just output). Thus, making intermediate

not happened.

Math behind direction changing abilities of clusters

By allowing only the values of chained neurons

that are surrounded by clustered random neurons to pass into the next layers in

the same network, the ability to change direction can be achieved.

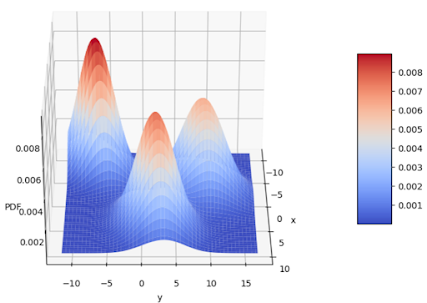

Ø Probability density function

It was

located after the encoder and before the decoder; the trainable PDF that was

trained in the encoder was transferred to the decoder as trained to make the

output correct. It was to mimic the neurons at the frontline of each sense.

These neurons get crowded at the place where higher impulse was entering into

the senses. So, importance was given to these impulses that enter via these

crowded regions, as these impulses have more energy to fully propagate through

the cycle. Higher valued regions get scaled by the arbitrarily trained value to

give the values from that region more importance. The ability to change slows

as more time passes, but it is not completely lost; it changes slower, similar

to the neurons in the brain. (Also, drastic change is not needed as reality

will not change drastically). Multiple PDF could also be used to get accurate

output if demanded by the model. As more PDF will collectively give more

importance to particular set of inputs/outputs. Also, crowdness of neurons in

the frontline input neuron will helps in increasing the intensity of the

impulse and thus finally helps in propagation of the impulse. PDF will try to

mimic this property,

The goal is to

learn a function that assigns a "probability" score to each location

in this grid, such that locations with important/relevant patterns get higher

scores, and locations with less useful patterns get lower scores.

Ø Tuneable focusing parameter

There should be a function that focuses or

scan and give out only values of the important regions (that is higher

intensity regions) from the trainable probability density function into the

first network layers. It was to eliminate noise from signal in the input. And

it was tuneable parameter that can set to value when the input signal was

correctly captured.

And there should be a real-time changeable surface layer on top of each peaks of the trainable probability density function. It was to refine the focusing parameter to few important chained neurons. Because as more the function gets trained there is a higher probability that equal importance was given to many chained neurons. I think it will reduce the probability of correctly gathering the patterns for the input.

Ø Biologically - inspired explanation

Neurons in the brain are clustered together by the effect of impulses traveling in any neuron. These impulses promote attraction and make connections with nearby neurons by activating them to release chemicals and synthesizing receptors for accepting the synapse. After clustering nearby neurons together, this path of clustered neurons acts as an important contributor to impulse transmission & manipulation by attracting more impulses. If any input in the environment generates more impulses along these clustered pathways compared to other pathways, then that input is processed in the brain. If any input with more impulse that is not passing along these clustered pathways, then that impulse is less likely to get into output or this impulse converge to the regularly attended clustered pathways. These clusters change the direction of the impulse correctly, activating a certain set of input cells in the input region (refer to the BrainX theory for understanding the above concept).

Therefore, this process of clustering and the clustering pathways abilities of changing direction were incorporated into this model to process input and solve problems.

The Wiggle Connection

This is the method to combine the required

clusters that are present in two different layers in the first network. The

combined cluster serves as input to the second network layers, and these

clusters will get fixed in the respective layers of the second network.

Math behind the wiggle connection

The function of the wiggle connection is to

merge the clusters from two layers (the current and previous layer) into a

single layer that contains all the clusters from these two layers, along with

the pixel values of the clustered active chained neurons only. This newly

created layer is then transferred as input to the current layer of the second

network. In the second network layers, all the functions and algorithms of the

first network layers occur, including block division, partitioning, clustering,

and the passing of pixel values of clustered active chained neurons.

Ø Similarities of clusters in layer and in between layer

The layers will cluster only the random neurons that are closer together. This action will be helpful in the direction-changing abilities of the cluster to activate a certain set of clusters at each layer. However, there is a problem: clusters in a single layer will not be enough to solve a problem. Every layer consists of different clusters because there is continuous updating of clusters in both networks. This continuous update assists in problem-solving.

These different clusters in each layer will

help to find the right set of clusters in the layer. Therefore, in order to

combine these clusters, we need a wiggle connection. This wiggle connection

combines the different clusters from two layers and combines them into a single

layer in the second network. The output from the second network is then

returned as input to the first network. In this way, the first network will

store the right set of different clusters that can actually solve the problem,

which is done by the mechanism of the wiggle connection.

Ø How it will happen –

The wiggle connection only works for two-layer output. If there are five layers in the first network, each output goes into the wiggle connection. The wiggle connection takes each output from the layers. If the current layer contains any previous layer's output or the current layer is preceded by the output of previous layers, then both the output of the previous layer and the current layer are combined into a single clustered output and placed into the respective layers of the second network. For example, the output of the first layer is allowed to pass through without any modification by the wiggle connection and is transferred as-is to the respective first layer of the second network.

When the output of the second layer is passed through the wiggle connection, it modifies the output by taking the previous first layer's output and merging these two layers' outputs into one output, which is then transferred as output to the respective second layer of the second network. This process continues for all the layers of the first network.

This concept assumes that clusters are

different in each layer, and they will be different after many iterations of

training. The model is capable of finding the smallest truth from the input,

which can be found by a suitable destabilizing mechanism.

Ø How wiggle connection changes the layers clusters?

There is a final output concept in this

architecture. If the output from the second network does not change much, or

there is not much difference in the two outputs, then the model should consider

that output as the final output. It should then stop the loop of transferring

the output between layers of the same network and different networks. Now, the

model should halt all operations, such as looping and forming clusters, until

the next input is presented to the model.

Ø How wiggle connection increases the correct output generation rate

By combining the saved clusters that represent

the smallest truth of reality, the correct generation output rate can be

increased. The major assumption is that each layer initially saves the same

kind of clusters that represent the smallest truth, as few inputs were given to

the model. However, after giving many inputs, the loop running for every input

will change the clusters of each layer of both networks. This results in each

layer saving different clusters that represent different smallest truths. By utilizing

the property of changing direction ability of each cluster in the layers, the

model can use both functions and retrieve the right set of clusters at each

layer. This is achieved by leveraging the changing direction abilities of

clusters and merging these retrieved clusters into one output by the wiggle

connection. The output is then transferred into a second network, and the

difference in each output is checked to finalize the output. This process

increases the probability of generating the right output.

Ø Biologically - inspired explanation

The brain follows a simple mechanism that

efficiently solves problems. Let me state the mechanism: impulses from the

external environment enter the brain, and as these impulses cycle inside the

brain, inevitable connections form between input impulse and output impulse

neuron clusters. This allows the answer to appear quickly to the observer,

making shortcuts to the answer by bypassing full computation and directly

connecting to the output after undergoing full computation iterations. This

process is followed for all inputs consistently. This process is a sure-to-happen

mechanism given the neurons biological properties.

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

Comments

Post a Comment